The challenges of developing ML solutions for high-resolution imagery

Building a machine vision platform that handles large-scale imagery efficiently is difficult. Such software has to integrate big data, machine learning, and advanced computing techniques to serve analysts working with microscopic, digital twin, or remote sensing imagery. In developing such a platform over the past 5 years, we have run into numerous obstacles. Today, we are excited to share with the community the difficulties we have faced and the solutions we have devised.

1. How to process large imagery and why is it so challenging?

Remote sensing images, the best-known example of very large images, are often stored in the GeoTIFF format, which web browsers do not support. The server therefore has to convert each image into a compatible format before users can view it in a web app like Deep Block. The conversion must be efficient, and it must not delay the response to the HTTP request or cause it to time out. This requires running the conversion as a background job and notifying the user once it is complete. To reduce processing time, we have implemented patented algorithms and optimized the platform's architecture to keep response times short, even for large images.

2. How do you apply machine learning technologies to such large images?

When analyzing these images, it is essential to divide them into smaller pieces. Someone without knowledge of computer vision or machine learning might feed the full image straight into an open-source AI model. This approach does not work: the model simply resizes the image to its input size, and important features are lost.
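A quick calculation shows why resizing destroys small objects. The numbers below are assumptions chosen for illustration (a 100,000 pixel wide scene, a 40 pixel wide vehicle, a model input of 640 pixels), not measurements from our platform.

```python
def object_size_after_resize(image_width_px, object_width_px, model_input_px):
    """Width of an object, in pixels, after the whole image is resized."""
    scale = model_input_px / image_width_px
    return object_width_px * scale


# Assumed example values: 100,000 px wide scene, 40 px wide vehicle,
# model input resized to 640 px.
shrunk = object_size_after_resize(100_000, 40, 640)
print(f"The vehicle is about {shrunk:.3f} px wide after resizing")
```

With these values the vehicle shrinks to roughly a quarter of a pixel, so no detector could recover it.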

However, splitting a large image raises numerous challenges of its own. Some may claim that dividing and processing these images is a simple task, but doing it well requires considerable expertise and sophisticated software. In the following sections, we examine the challenges posed by image division and explain how we have overcome them.

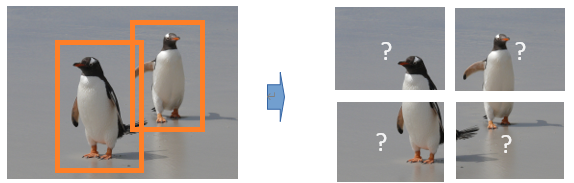

3. Problem of division - Missing part of objects

When the image is divided, objects that straddle a border are cut, and only parts of them remain in each piece. A machine learning model may still recognize some of these fragments, but machine vision models generally struggle to interpret partial objects.

Some machine learning engineers will say, "I will implement an algorithm to fix this problem."

However, the question remains, "How exactly will you do that?"

4. More problems that arise while dividing the images

To tackle this challenge, we have developed our own patented algorithm that effectively solves the problem.
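The patented algorithm itself is not described in this article. For illustration only, a widely used baseline for the border problem is to cut overlapping tiles, so that any object smaller than the overlap appears whole in at least one tile. The sketch below assumes integer pixel sizes; `tile_windows` and its parameters are hypothetical names.

```python
def _starts(length, tile, stride):
    """Start offsets along one axis; the last tile is shifted to fit."""
    if length <= tile:
        return [0]
    starts = list(range(0, length - tile, stride))
    starts.append(length - tile)  # final tile ends exactly at the border
    return starts


def tile_windows(width, height, tile, overlap):
    """Yield (x, y, w, h) windows that cover the image with overlap."""
    if not 0 <= overlap < tile:
        raise ValueError("overlap must be non-negative and below the tile size")
    stride = tile - overlap
    for y in _starts(height, tile, stride):
        for x in _starts(width, tile, stride):
            yield x, y, min(tile, width - x), min(tile, height - y)


# A 1000 x 700 px image cut into 400 px tiles with 100 px of overlap.
windows = list(tile_windows(1000, 700, 400, 100))
```

Overlap has a cost: objects inside the overlap region are detected twice, so the per-tile results still need to be merged and de-duplicated afterwards.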

However, implementing an algorithm is just the beginning. Dividing an ultra-high-resolution image creates a huge number of files, and reading a file from disk is significantly slower than reading data already loaded in RAM or cache. To handle this, we have incorporated advanced software engineering technologies.
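One simple way to avoid repeated disk reads is to keep recently used tiles in memory. The sketch below uses Python's standard `functools.lru_cache`. The `read_tile` function and the cache capacity are assumptions for illustration, not a description of our storage layer.

```python
from functools import lru_cache
from pathlib import Path


@lru_cache(maxsize=256)  # assumed capacity; tune it to the available RAM
def read_tile(path):
    """Read a tile file once; later calls for the same path are served from RAM."""
    return Path(path).read_bytes()
```

`read_tile.cache_info()` reports hits and misses, which helps when choosing the cache size.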

5. Once processed, how to display ultra-high-resolution images?

In some cases, satellite or microscope images can exceed 10 GB, with a resolution exceeding 100K. This poses significant challenges when rendering the image in the browser. To handle this, we have implemented specialized algorithms that break the image down into smaller sections, which are rendered on the client side using the HTML canvas element. We also use various caching techniques to give quick access to previously rendered sections and keep rendering smooth.
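The core of section-based rendering is deciding which sections the current viewport actually needs. The following sketch assumes a fixed square tile size and integer pixel coordinates; `visible_tiles` is a hypothetical helper, written in Python for readability even though the equivalent logic would run in the browser.

```python
def visible_tiles(view_x, view_y, view_w, view_h, tile, image_w, image_h):
    """Return (col, row) indices of the tiles that intersect the viewport."""
    # Clamp the viewport to the image bounds.
    x0 = max(0, view_x)
    y0 = max(0, view_y)
    x1 = min(image_w, view_x + view_w)
    y1 = min(image_h, view_y + view_h)
    if x0 >= x1 or y0 >= y1:
        return []  # viewport lies entirely outside the image
    first_col, last_col = x0 // tile, (x1 - 1) // tile
    first_row, last_row = y0 // tile, (y1 - 1) // tile
    return [
        (col, row)
        for row in range(first_row, last_row + 1)
        for col in range(first_col, last_col + 1)
    ]


# A 1000 x 600 px viewport over a 100,000 x 100,000 px image, 512 px tiles.
needed = visible_tiles(500, 500, 1000, 600, 512, 100_000, 100_000)
```

In this example the viewport touches 9 tiles out of more than 38,000, which is why only a small fraction of the image ever needs to be fetched and drawn.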

6. How to overcome canvas limitations?

As seen in the previous section, HTML canvas elements are used to render and manipulate images. However, the recommended maximum size of an image rendered on a single canvas is 5000x5000 pixels. To overcome this limitation, we have implemented specialized algorithms that split large images into smaller sections, which can be rendered on multiple canvases simultaneously. We have also developed techniques that pre-render an image mosaic on the server side, which is then served to the client for viewing.

Modern web browsers also have memory usage limitations: most web pages stay below 50MB of memory, and past 128MB the browser's performance degrades sharply. This poses challenges when rendering ultra-high-resolution remote sensing images in our interface. Therefore, we only load the necessary sections of the image into memory, reducing the memory footprint of the application. Additionally, because the images are stored and processed in the cloud, the client-side application's memory is not consumed by that work.

7. Then, why choose to develop a web-based Platform?

Despite web browser limitations, Deep Block’s computer vision platform must be web-based so that users around the world can access the service. Otherwise, we would need to build end-user software with C++ or with Python and Qt, and apart from C++, these technologies are not widely used for that purpose. Web apps, by contrast, offer great flexibility in layout and design and present many benefits when designing and maintaining the platform.

8. Why is parallel processing required to analyze ultra-high-resolution images?

As explained in a previous article, these ultra-high-resolution images must be divided into multiple sections before they can be analyzed. Division adds overhead: cutting the image creates many files, and processing many images naturally takes more time than processing one. We use parallel processing to keep the service fast. However, implementing such techniques is not easy and requires significant expertise. We have developed specialized algorithms so that multiple instances of the same code can run simultaneously on multiple processors or nodes. In other words, we use distributed computing to spread the image processing tasks across multiple computing nodes, which reduces the overall processing time for large images.
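On a single machine, the same idea can be sketched with Python's standard `concurrent.futures` module. This is an illustration under stated assumptions, not our distributed system: `analyze_tile` is a hypothetical stand-in for the real per-tile analysis, and a thread pool is used only to keep the example short. CPU-bound work would normally use `ProcessPoolExecutor` or a cluster scheduler instead.

```python
from concurrent.futures import ThreadPoolExecutor


def analyze_tile(window):
    """Hypothetical per-tile analysis; here it only reports the tile area."""
    x, y, w, h = window
    return {"window": window, "area": w * h}


def analyze_in_parallel(windows, executor):
    """Run analyze_tile on every window; results keep the input order."""
    return list(executor.map(analyze_tile, windows))


# Three example tile windows given as (x, y, width, height).
windows = [(0, 0, 400, 400), (300, 0, 400, 400), (600, 0, 400, 400)]
with ThreadPoolExecutor(max_workers=4) as pool:
    results = analyze_in_parallel(windows, pool)
```

Because `executor.map` preserves input order, each result can be matched back to its tile without extra bookkeeping, which simplifies merging the per-tile outputs into one result for the full image.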

In particular, GeoTIFF images also contain geospatial metadata, and objects in these images often appear small, so preprocessing and several algorithms are needed to recognize them. To address this challenge, we have developed custom algorithms that preprocess the geospatial images to extract the relevant information. These algorithms help to identify specific objects within the image, such as buildings or vehicles, which can then be labeled and extracted for further analysis.
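One concrete use of the geospatial metadata is converting a detected object's pixel position into map coordinates. The sketch below assumes the six-coefficient affine geotransform convention used by GDAL; the coefficient values and the pixel position are made-up examples, and `pixel_to_geo` is a hypothetical helper.

```python
def pixel_to_geo(geotransform, col, row):
    """Map a pixel (col, row) to georeferenced (x, y) coordinates.

    geotransform follows the GDAL ordering:
    (origin_x, pixel_width, row_rotation, origin_y, col_rotation, pixel_height)
    """
    origin_x, pixel_w, row_rot, origin_y, col_rot, pixel_h = geotransform
    x = origin_x + col * pixel_w + row * row_rot
    y = origin_y + col * col_rot + row * pixel_h
    return x, y


# Assumed example: north-up image, 0.5 map units per pixel, no rotation.
# pixel_height is negative because rows increase downwards.
geotransform = (500_000.0, 0.5, 0.0, 4_100_000.0, 0.0, -0.5)

# A detection at local pixel (200, 100) inside a tile whose top-left corner
# sits at pixel (1800, 900) of the full image.
tile_x, tile_y = 1800, 900
geo_x, geo_y = pixel_to_geo(geotransform, tile_x + 200, tile_y + 100)
```

Adding the tile offset before applying the transform is what ties a detection made on a small tile back to its position in the full scene.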

9. How to choose the right experts to support you?

The analysis of high-resolution images is a challenging task: their size and resolution make them a form of big data. Combining machine learning and technologies like parallel computing with big data compounds this challenge, and it is almost impossible for natural scientists and GIS analysts to tackle it alone.

Furthermore, developing working software and implementing machine learning algorithms is not enough. The industry generates innumerable images each day, and the world is constantly changing. Therefore, the software must be user-friendly and able to analyze input images quickly to ensure a seamless user experience. Speed and user experience are crucial factors that researchers and individuals sometimes overlook, failing to acknowledge the importance of software engineering and computer software technology. If asked to design software architecture similar to Deep Block, they may not even know where to start.

Geospatial and manufacturing industries are lagging behind in terms of artificial intelligence and are heavily dependent on manual labor for various analysis tasks. However, Deep Block, with its extensive expertise in computer science, aims to tackle these challenges by creating user-friendly software that efficiently processes images. By leveraging advanced algorithms and technologies, Deep Block offers a solution that not only enhances the speed of image processing but also ensures a seamless user experience.