Importance of Early Stopping and the Appropriate Number of Epochs

Learn how to train computer vision models effectively: why early stopping matters, and how to choose an appropriate number of training epochs.

Understanding Computer Vision Model Training

Computer vision model training is a crucial step in building accurate and robust computer vision models. It involves training a neural network to learn from a large dataset of labeled images, enabling it to recognize and classify objects or patterns in new, unseen images. This process requires careful consideration of various factors, such as the architecture of the model, the quality and diversity of the training data, and the optimization techniques used.

To understand computer vision model training, it helps to know the basics of neural networks and deep learning. Neural networks are computational models made of layers of interconnected units (neurons). Each neuron computes a weighted sum of its inputs followed by a simple nonlinear function. Deep learning, a subset of machine learning, trains deep neural networks with many such layers, which learn increasingly abstract features of the data. Modern computer vision models use deep learning to analyze and interpret visual data.

Training adjusts the model's parameters, such as the weights and biases of its neurons, to improve its performance. Backpropagation computes how much each parameter contributed to the model's error (its gradient), and an optimizer such as gradient descent then updates the parameters in the direction that reduces that error. You can learn this easily in our FREE Deep Learning Course.
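To make the update step concrete, here is a minimal sketch in plain Python, assuming a toy one-feature linear model. `train_linear` is a hypothetical helper for illustration, not part of any library or of Deep Block. Its gradients are derived by hand with the chain rule, which is the computation backpropagation automates across every layer of a deep network. Each epoch makes one full pass over the data followed by one gradient-descent update.

```python
# Minimal sketch (hypothetical helper): fit y = w * x + b by gradient descent.
# Gradients are derived by hand with the chain rule; backpropagation
# automates exactly this for every layer of a deep network.

def train_linear(xs, ys, epochs=200, lr=0.05):
    w, b = 0.0, 0.0  # parameters: one weight and one bias
    n = len(xs)
    for _ in range(epochs):  # one epoch = one full pass over all samples
        grad_w = 0.0
        grad_b = 0.0
        for x, y in zip(xs, ys):
            pred = w * x + b   # forward pass
            err = pred - y     # d(loss)/d(pred) for loss = 0.5 * err**2
            grad_w += err * x  # chain rule: d(pred)/dw = x
            grad_b += err      # chain rule: d(pred)/db = 1
        # Gradient-descent update using the mean gradient over the dataset
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # generated by y = 2x + 1
w, b = train_linear(xs, ys, epochs=2000, lr=0.05)
print(round(w, 3), round(b, 3))  # prints 2.0 1.0
```

With enough epochs the parameters converge to the line that generated the data; a real network has millions of parameters, but each one is updated by the same rule.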

The Role of Early Stopping in Model Training

Early stopping is a technique used in model training to prevent overfitting and improve generalization performance. Overfitting occurs when a model becomes too specialized in the training data and fails to generalize well to new, unseen data. This can lead to poor performance on real-world tasks.

Stopping training before the model starts to memorize the training data keeps it focused on patterns that generalize, which helps it perform well on new, unseen data.

An epoch is one complete pass through the training dataset, and choosing the right epoch at which to stop is a crucial step in model training. If training stops too early, the model may not have converged, and its performance will be suboptimal. If training continues for too long, the model may start to overfit the training data.

Finding that epoch requires monitoring the model's performance on a held-out validation dataset after each epoch. When validation performance plateaus or starts to decline while training performance keeps improving, the model has likely begun to overfit, and the epoch with the best validation performance is a good stopping point.
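The monitoring rule above is often implemented with a "patience" counter: stop once the validation loss has not improved for a set number of consecutive epochs, and keep the weights from the best epoch. The sketch below assumes validation loss is recorded once per epoch (lower is better). `early_stopping_epoch`, `patience`, and `min_delta` are illustrative names, not Deep Block settings, though many frameworks' early-stopping callbacks use a similar idea. The loss values in the usage line are made-up numbers for illustration, not measured results.

```python
# Minimal sketch of patience-based early stopping (hypothetical helper).
# Assumption: one validation loss per epoch, lower is better.

def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (best_epoch, stop_epoch), both 1-indexed.

    Training stops once the validation loss has failed to improve by more
    than `min_delta` for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss - min_delta:
            # New best: remember it and reset the patience counter
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch  # stop here; restore best_epoch's weights
    return best_epoch, len(val_losses)  # criterion never triggered


# Illustrative (made-up) validation losses: improvement stalls after epoch 4
losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.53, 0.56, 0.60]
print(early_stopping_epoch(losses, patience=3))  # prints (4, 7)
```

A larger `patience` tolerates noisy validation curves at the cost of a few extra epochs of training.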

By effectively utilizing early stopping and determining the appropriate epoch, you can prevent overfitting and improve the generalization performance of your computer vision models, leading to more accurate and robust predictions.

Determining the Appropriate Epoch in DeepBlock.net

Deep Block is a no-code machine learning model development platform that lets users train and use various computer vision models without writing code.

Because Deep Block is designed for people with no coding or machine learning experience, it provides several convenience features, including epoch configuration for model training.

1. Our computer vision model is designed to reduce the risk of overfitting, which makes it more forgiving of high epoch counts. Users can therefore train for more epochs with less concern about overfitting.

2. Consider the amount of training data when choosing the number of epochs. As a general rule, the more training data you have, the fewer epochs you need, because each epoch over a large dataset already contains many parameter updates.

For instance, with more than 20,000 annotations per category, around 10 epochs or fewer is usually sufficient (effectively an early stop).

On the other hand, if the training data is limited to only a few hundred annotations per class, around 30 epochs may work better.

Adapting the number of epochs to the amount of available training data helps you get the best performance out of your computer vision models.

3. Our service lets users run multiple GPUs simultaneously, which shortens the time needed to find an appropriate number of epochs by trial and error.
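To see why point 2 suggests fewer epochs for larger datasets, it helps to count optimizer updates rather than epochs. The sketch below assumes each annotation counts as one training sample and uses a batch size of 16; both are illustrative assumptions, not Deep Block's actual settings, and `total_updates` is a hypothetical helper.

```python
import math

# Illustrative assumptions (not Deep Block settings):
# one annotation = one training sample, batch size = 16.
def total_updates(num_samples, batch_size, epochs):
    # One epoch is a full pass, split into ceil(N / batch_size) optimizer steps
    steps_per_epoch = math.ceil(num_samples / batch_size)
    return steps_per_epoch * epochs


large = total_updates(20_000, batch_size=16, epochs=10)  # large dataset, few epochs
small = total_updates(300, batch_size=16, epochs=30)     # small dataset, more epochs
print(large, small)  # prints 12500 570
```

Even at one third of the epochs, the large dataset receives far more parameter updates, which is why it can reach good performance with fewer epochs.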

Let’s learn how to utilize Deep Block to find the optimal training epoch.

Deep Block offers access to cloud GPU resources for training computer vision models. Subscription fees are based on cloud resource usage, but DeepBlock.net includes a certain amount of free GPU usage every month.

Free users can use 2 GPUs at once, while corporate customers have the flexibility to utilize even more GPUs.

If you're interested in harnessing the full potential of Deep Block's GPU resources for your model training, please don't hesitate to get in touch with us.

Now, let's see how to train a model with several different epoch values in Deep Block.

- First, prepare a computer vision project with training data ready.

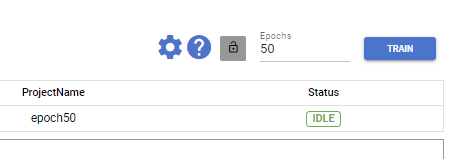

Let's say we have a project with 50 training epochs.

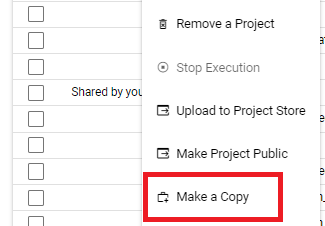

- Then, make several copies of the project in the console and set a different epoch value for each copy.

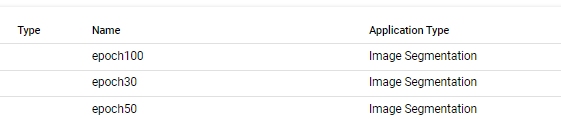

- As you can see, you now have projects with different epoch values.

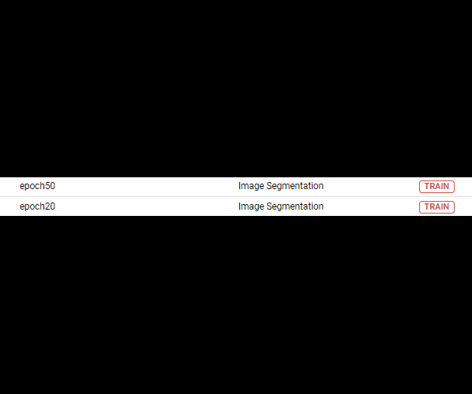

- Train these projects at the same time, then compare each model's validation metrics to see which epoch value performs best.

Free users can use up to two GPUs at the same time; if you want to use more, please contact us.
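For readers who also train models locally, the same trial-and-error sweep can be sketched in code: run one job per epoch value, at most two at a time, and keep the epoch value with the best validation metric. This is a minimal sketch, not Deep Block's API. `sweep_epochs` and `fake_train_and_validate` are hypothetical names, and the stand-in function returns a synthetic loss curve rather than real training results; in practice you would replace it with code that trains a model and returns its validation loss.

```python
from concurrent.futures import ThreadPoolExecutor


def sweep_epochs(train_and_validate, epoch_values, max_parallel=2):
    """Run one job per epoch value, at most `max_parallel` at a time.

    Returns (best_epochs, results); lower validation loss is better.
    `max_parallel=2` mirrors the two simultaneous GPUs of a free account.
    """
    with ThreadPoolExecutor(max_workers=max_parallel) as pool:
        # pool.map returns results in the same order as epoch_values
        losses = list(pool.map(train_and_validate, epoch_values))
    results = dict(zip(epoch_values, losses))
    best_epochs = min(results, key=results.get)
    return best_epochs, results


# Hypothetical stand-in for a real training run: a synthetic validation-loss
# curve that bottoms out at 20 epochs. Replace with actual training code.
def fake_train_and_validate(epochs):
    return (epochs - 20) ** 2 / 100 + 0.3


best, results = sweep_epochs(fake_train_and_validate, [10, 20, 30, 50])
print(best)  # prints 20
```

Threads are enough here because each job would normally hand its work to a separate GPU or process; the sweep logic only needs to collect one validation number per epoch value.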