The Challenge and Importance of Similar Object Detection

Similar object detection is a deceptively difficult problem. Research on distinguishing visually similar categories has been ongoing for decades, yet in many industries, it remains a persistent and costly challenge. Unlike standard object detection, which typically deals with clearly distinct categories, similar object detection—or fine-grained object detection—demands that models separate objects that appear nearly identical in size, shape, and color.

Consider satellite imagery analysis in defense intelligence (IMINT). From a high altitude, a tank, a self-propelled howitzer, and an armored personnel carrier can look remarkably similar. Yet for an analyst, these vehicles carry very different operational implications and strategic value. Misidentifying them can distort threat assessments or lead to flawed decision-making. Likewise, in aerial reconnaissance, two aircraft with similar silhouettes may have entirely different roles and capabilities: one may be designed for transport, another for electronic warfare. The ability to correctly distinguish such assets in limited-resolution imagery can directly influence tactical and strategic outcomes.

This difficulty extends to commercial and industrial applications as well. Whether it’s identifying subtle variations in ship types in maritime monitoring, differentiating between similar semiconductor components in an inspection line, or recognizing species-specific traits in ecological surveys, the stakes are high. In each case, a wrong classification can have real-world consequences.

Why Similar Object Detection Is Especially Challenging

Traditional detection pipelines excel when visual differences between classes are pronounced—cars versus bicycles, cats versus dogs. In similar object detection, however, the differences are often small, context-sensitive, and easily lost in the noise of real-world conditions. The object’s geometry might differ only in a minor contour; the texture might vary in ways that are hard to capture at a distance; the distinguishing features may occupy only a fraction of the total bounding box.

At the extreme, in low-resolution optical satellite imagery or synthetic aperture radar (SAR) images, even the distinctive shapes of self-propelled howitzers and tanks become so ambiguous that the human eye can barely tell them apart. In such degraded or noisy imagery, the problem is compounded by decoys: inflatable or otherwise improvised mock-ups built to mimic the appearance of real military assets. Well-made decoys can mislead even highly trained analysts. Given how hard this is for humans, it is hardly surprising that artificial intelligence systems also struggle. When an expert cannot readily perceive the distinguishing cues, an automated system has very little signal to learn from, and accurate automatic separation becomes an almost insurmountable challenge.
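A back-of-the-envelope calculation shows why resolution matters so much. The sketch below converts ground sample distance (GSD, metres per pixel) into how many pixels, and how many backbone feature-map cells, a vehicle spans. The vehicle length of about 7 m, the total backbone stride of 16, and the helper names `pixels_across` and `feature_cells_across` are all illustrative assumptions, not properties of any particular sensor or model.

```python
def pixels_across(length_m: float, gsd_m: float) -> float:
    """Number of image pixels spanned by an object of the given length."""
    return length_m / gsd_m


def feature_cells_across(length_m: float, gsd_m: float, stride: int) -> float:
    """Number of feature-map cells spanned after a backbone with this total stride."""
    return pixels_across(length_m, gsd_m) / stride


# Illustrative assumptions: a ~7 m armored vehicle and a stride-16 feature map.
VEHICLE_LENGTH_M = 7.0
STRIDE = 16

for gsd in (0.3, 0.5, 1.0, 3.0):
    px = pixels_across(VEHICLE_LENGTH_M, gsd)
    cells = feature_cells_across(VEHICLE_LENGTH_M, gsd, STRIDE)
    print(f"GSD {gsd:>4} m: {px:5.1f} px long, {cells:4.2f} cells at stride {STRIDE}")
```

At 3 m GSD the whole vehicle covers only about two pixels, and a single stride-16 feature cell is several times longer than the vehicle itself. By that point the turret or gun barrel that separates a tank from a self-propelled howitzer has effectively vanished before any classifier sees it.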

Distinguishing objects that are nearly identical in shape, size, and even surface texture has long been one of the most difficult frontiers in computer vision. Many real-world scenarios demand that such minute differences be detected and interpreted correctly, and these scenarios routinely expose the limits of both human perception and state-of-the-art machine learning models. Conventional object detection can rely on clear boundaries and obvious cues between categories. Fine-grained detection must instead pick up small, localized differences, which requires much finer spatial detail and more discriminative features than standard detectors are designed to capture. This need continues to push the boundaries of current technology and remains one of the foremost challenges in the field.

Famous Benchmark Datasets

Several datasets have emerged to address and measure progress in fine-grained and similar object detection.

FAIR1M

A high-resolution remote sensing dataset containing over 15,000 images and more than a million annotated objects. FAIR1M groups objects into five broad categories (airplanes, ships, vehicles, courts, and roads), which are further divided into 37 fine-grained sub-categories. Objects are annotated with oriented (rotated) bounding boxes, which fit elongated objects tightly regardless of heading and keep neighboring objects out of the box in dense scenes such as harbors. This is a key advantage when differentiating, for example, dry cargo ships from liquid cargo ships (tankers).
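To see why oriented boxes help with elongated objects, the sketch below compares a rotated box with the smallest horizontal box that encloses it. It assumes the common (cx, cy, w, h, θ) parameterization used by many oriented detectors; it does not reproduce FAIR1M's own annotation file format, and the 100 × 20 pixel "vessel" is a hypothetical example.

```python
import math


def rotated_box_corners(cx, cy, w, h, theta):
    """Corners of a w x h box centred at (cx, cy) and rotated by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    offsets = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    # Rotate each corner offset about the centre, then translate to (cx, cy).
    return [(cx + dx * c - dy * s, cy + dx * s + dy * c) for dx, dy in offsets]


def enclosing_axis_aligned_area(corners):
    """Area of the smallest horizontal box that contains all the corners."""
    xs = [x for x, _ in corners]
    ys = [y for _, y in corners]
    return (max(xs) - min(xs)) * (max(ys) - min(ys))


# Hypothetical elongated vessel: 100 x 20 pixels, lying at 45 degrees.
corners = rotated_box_corners(cx=200, cy=200, w=100, h=20, theta=math.pi / 4)
rotated_area = 100 * 20
hbb_area = enclosing_axis_aligned_area(corners)
print(f"rotated box fills {rotated_area / hbb_area:.0%} of the horizontal box")
```

Here the rotated box covers only about 28% of the horizontal box that would otherwise be needed. The remaining area is background or, in a crowded harbor, parts of neighboring ships, all of which dilute the features a classifier must use to separate similar vessel types.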

FGVC-Aircraft

Comprising around 10,000 images of 100 aircraft model variants, FGVC-Aircraft challenges models to pick up on minute differences: slight variations in wing shape, tail design, or engine placement. Although it was designed as a classification benchmark, the main aircraft in each image is also annotated with a tight bounding box, so the dataset can be adapted for localization and detection experiments.

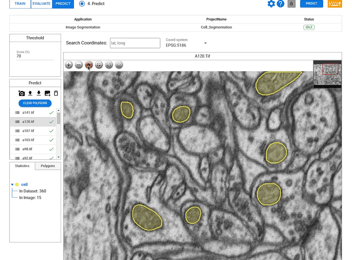

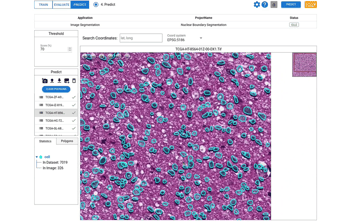

Techniques for Improving Similar Object Detection

Strong datasets are only part of the solution. Fine-grained scenarios require models that can integrate both holistic and highly localized features:

- Metric learning losses such as ArcFace and CosFace add an angular or cosine margin to the softmax classification loss. This forces the embeddings of object crops to be more discriminative, increasing separability between near-identical categories.

- Part-based modeling directs attention to the most informative components, such as the turret of an armored vehicle, the bridge of a ship, or the tail assembly of an aircraft.

- Scale-aware detection addresses cases where object sizes in the target imagery differ from those in the pre-training data, a known issue in small-object domains. For example, the work that introduced the TinyPerson dataset proposes Scale Match, which rescales pre-training images so that their object-size distribution resembles that of the target dataset.
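The margin idea behind ArcFace and CosFace can be sketched in a few lines of NumPy. This is a minimal illustration of the loss arithmetic only, assuming crop embeddings have already been extracted (for example from a detector's RoI head, which is an assumption about the wiring, not part of either method). The function names, the toy data, and the default `s=64.0, m=0.5` are illustrative choices rather than tuned values for fine-grained detection.

```python
import numpy as np


def margin_logits(embeddings, class_weights, labels, s=64.0, m=0.5, mode="arc"):
    """Margin-based logits in the style of ArcFace ("arc") or CosFace ("cos").

    embeddings:    (N, D) features of N object crops
    class_weights: (C, D) one learned weight vector per fine-grained class
    labels:        (N,) integer class ids
    """
    # L2-normalise so each dot product is the cosine of the angle between vectors.
    x = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    w = class_weights / np.linalg.norm(class_weights, axis=1, keepdims=True)
    cos = np.clip(x @ w.T, -1.0, 1.0)  # (N, C)

    rows = np.arange(len(labels))
    target_cos = cos[rows, labels]
    if mode == "arc":
        # ArcFace: add the margin to the angle of the true class.
        # Reference implementations also guard the theta + m > pi case; omitted here.
        target_logit = np.cos(np.arccos(target_cos) + m)
    elif mode == "cos":
        # CosFace: subtract the margin from the cosine of the true class.
        target_logit = target_cos - m
    else:
        raise ValueError(f"unknown mode: {mode}")

    logits = cos.copy()
    logits[rows, labels] = target_logit
    return s * logits


def softmax_cross_entropy(logits, labels):
    """Mean cross-entropy, computed stably by subtracting each row's maximum."""
    z = logits - logits.max(axis=1, keepdims=True)
    log_probs = z - np.log(np.exp(z).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels].mean()


# Toy example: 3 classes, each crop embedding already aligned with its class weight.
W = np.eye(3)
E = np.eye(3)
y = np.array([0, 1, 2])
plain = softmax_cross_entropy(margin_logits(E, W, y, s=10.0, m=0.0), y)
arc = softmax_cross_entropy(margin_logits(E, W, y, s=10.0, m=0.5), y)
cosface = softmax_cross_entropy(margin_logits(E, W, y, s=10.0, m=0.35, mode="cos"), y)
print(plain, arc, cosface)
```

Even when a crop sits exactly on its class direction, the margin leaves a non-zero loss, so training keeps pulling same-class embeddings together and pushing the other classes further away. That extra separation is what helps near-identical categories. In practice the weights would be learned in a deep learning framework; NumPy is used here only to make the arithmetic visible.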
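The core of the Scale Match idea can be illustrated with a simplified sketch: for each pre-training image, draw a size from the target dataset's empirical object sizes and resize the image so its objects take on that size. This is written in the spirit of the method, not as a faithful reimplementation. The original paper's sampling procedure (histogram-based, with a monotone variant) differs. Measuring size as the square root of box area, using the image's mean object size, and the helper names are all assumptions of this sketch.

```python
import math
import random


def box_size(box):
    """Absolute object size, taken here as sqrt(box area) in pixels."""
    x1, y1, x2, y2 = box
    return math.sqrt((x2 - x1) * (y2 - y1))


def scale_match_factor(image_boxes, target_sizes, rng):
    """Choose a resize factor for one pre-training image.

    The image's mean object size is mapped onto a size drawn at random
    from the target dataset's empirical object sizes.
    """
    source = sum(box_size(b) for b in image_boxes) / len(image_boxes)
    desired = rng.choice(target_sizes)
    return desired / source


def rescale_boxes(boxes, factor):
    """Scale box coordinates; the image itself must be resized by the same factor."""
    return [tuple(c * factor for c in box) for box in boxes]


# Hypothetical data: pre-training objects around 100 px, target objects around 10-20 px.
rng = random.Random(0)
pretrain_boxes = [(0, 0, 100, 100), (50, 50, 170, 130)]
target_sizes = [box_size(b) for b in [(0, 0, 12, 10), (5, 5, 20, 18), (0, 0, 9, 14)]]

factor = scale_match_factor(pretrain_boxes, target_sizes, rng)
print(f"resize factor {factor:.3f}", rescale_boxes(pretrain_boxes, factor))
```

Resizing the pre-training images this way means the backbone learns features at roughly the object scale it will face in the target imagery, rather than at the much larger scale typical of generic pre-training datasets.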

Why This Matters Across Industries

Similar object detection matters most in sectors where visual ambiguity has high consequences.

In defense and intelligence, the correct classification of military assets from aerial or satellite imagery can inform strategic planning, resource allocation, and threat assessments. Misclassification could lead to underestimating an adversary’s capabilities or misdirecting operational resources.

In maritime and transportation monitoring, accurate vessel or vehicle classification affects regulatory enforcement, port operations, and even environmental response planning.

These are not domains where “close enough” is acceptable. Errors can propagate through decision-making pipelines, turning small mistakes into major operational or financial consequences. As AI systems are increasingly deployed in mission-critical contexts, robust fine-grained detection capabilities become not just advantageous, but essential.